How to Build a Retrieval-Augmented Generation (RAG) System in Python

(DRAFT: This is a first draft. But I wanted to make it available to readers now. Thanks!)

A Retrieval-Augmented Generation (RAG) system is an AI system that starts with a generative model (such as the LLMs behind ChatGPT) and adds in external information, effectively "augmenting" the LLM with that additional information. A great example is a company knowledge base. Imagine you want to build a system that lets users search a company knowledge base, not on specific keywords found in it, but on general concepts such as "How do I file for an extended leave?" The term "extended leave" might not appear word-for-word in the corporate documentation. But because today's generative AI is so good at interpreting language, we can build a tool that finds the answer anyway and, using the information found in the corporate documentation, generates a proper response that's worded as if it were spoken by a human.

In order to make this happen, we need to start with an LLM, but then "augment" it with the additional information from the corporate documentation.

As LLMs are initially pre-trained on large data sets and then "fine-tuned," they learn only the information given to them in the training data. While they're good at coming up with new and creative ideas, the ideas they generate are limited to what they've been trained on. And by the time you start using an LLM, such as through ChatGPT, the information the LLM knows is already fixed.

RAG systems, on the other hand, let you augment that knowledge with whatever new information you want. Nothing is added to the LLM itself; instead, the new information is combined with what the LLM already knows at the moment you ask a question.

But wait! Don't LLMs learn as they go?

Not in the sense people seem to think. As you're interacting with ChatGPT, asking questions, and sharing information with it, it's not actually learning new material from you. This is a popular misconception. It does temporarily pick up the information you share during your current chat session, but that information doesn't get stored in the LLM. After you close your session, anything it picked up goes away with the session.

RAGs need Two Models

Most people are familiar with LLMs -- they're the models used by tools like ChatGPT. But in order to process your own set of documents, you need a second type of model called a Sentence Transformer. Here's a very brief explanation of the difference.

An LLM processes sentences and paragraphs by breaking them up into individual words and even sub-words, called tokens. The LLM processes an entire paragraph as a sequence of these individual tokens. As it "reads" a prompt, it builds a coherent understanding of the question being asked.

As they build a response, they do the same, but in reverse. Because of the sheer size of the information they've been trained with, they're able to predict the first word (or token) of a response. Then they take that single word and send it back into the processing along with the original prompt, and generate a second word. Then they do it all again, and generate a third word. Each word (again, really a token) they generate is based on the probability of what the most likely next token will be. And that's how they generate a response.
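To make that loop concrete, here is a toy sketch in Python. The lookup table `NEXT_TOKEN_PROBS` is entirely made up for illustration; a real LLM computes these probabilities with a neural network that looks at the whole preceding text, not just the last token.

```python
# Toy illustration of token-by-token generation (not a real LLM).
# NEXT_TOKEN_PROBS is a made-up table: for each token, the probability
# of each possible next token.
NEXT_TOKEN_PROBS = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"handbook": 0.7, "policy": 0.3},
    "a": {"policy": 1.0},
    "handbook": {"says": 0.8, "<end>": 0.2},
    "policy": {"<end>": 1.0},
    "says": {"hello": 0.9, "<end>": 0.1},
    "hello": {"<end>": 1.0},
}


def generate(max_tokens=10):
    """Build a response one token at a time, always taking the likeliest."""
    tokens = ["<start>"]
    for _ in range(max_tokens):
        candidates = NEXT_TOKEN_PROBS[tokens[-1]]
        # Greedy choice: pick the most probable next token.
        next_token = max(candidates, key=candidates.get)
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens[1:]  # drop the <start> marker


print(" ".join(generate()))  # the handbook says hello
```

Each pass through the loop feeds the text so far back in and asks for one more token, which is the same shape as the process described above.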

But in order to augment an LLM with additional information, we don't want to process individual words. We want to process entire sentences. That's where the second model, the sentence transformer, comes in.

The sentence transformer takes a sentence based on its context within a paragraph, and builds a sequence of numbers representing the sentence, called a sentence embedding. This embedding is essentially a numerical representation of the sentence, along with context.

Why does context matter? Because a sentence could have a completely different meaning when used differently. The most common example I've seen in the documentation about sentence transformers is this:

"I saw her duck."

What does that mean? Well, it depends on the situation.

If you're at a county fair, and a girl is showing off one of her ducks she raised, it would mean you saw the duck she's presenting.

But if you're at a game of dodgeball, and she's out there playing, and the ball flies past her, but misses because she ducked, then "I saw her duck" would mean something completely different in this situation. When searching a corporate knowledge base, context can be vital if you want your RAG system to provide a correct answer.

Searching for the document, versus constructing a proper response

I've written extensively about sentence transformer libraries, but I'm always careful to specify whether I'm talking about a true RAG or not, as there are two levels we can take such a system to:

- The system can locate the document with the answer

- The system can generate an answer based on the prompt, combined with what the documentation has to say about it.

The first one stops short of being a true RAG; instead, for example, the user might put in a search, and be presented with a quote from a paragraph in the documentation. That's not technically a RAG yet. The second one is, however, in that the system will then generate a human-sounding response. But we have to be careful, since the generated response could be wrong in the same way that ChatGPT and similar tools can produce wrong answers.

Let's build the app!

It's shockingly easy to build an app that accomplishes all of the above, because a Python library called Sentence Transformers takes care of most of the details for us.

So let's build the app, play with it a bit, and then talk about some of the details that are happening behind the scenes. Why? Because if you really want to become an expert in this field, you can't just let the library handle it all blindly. You need to know a little bit about what's happening "under the hood."

Let's do this in Google Colab!

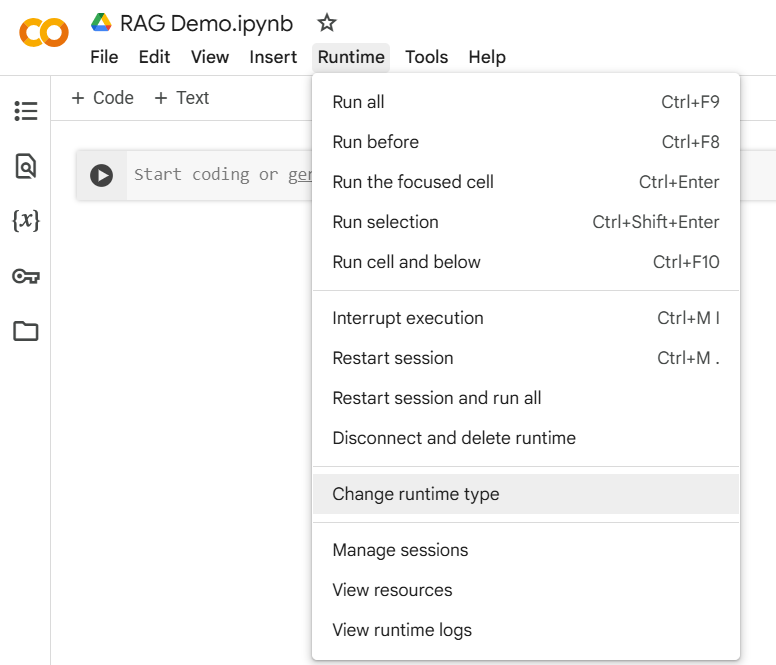

Open up Google Colab (http://colab.research.google.com/) and create a new notebook. Then switch to a GPU-backed runtime by clicking Runtime -> Change runtime type:

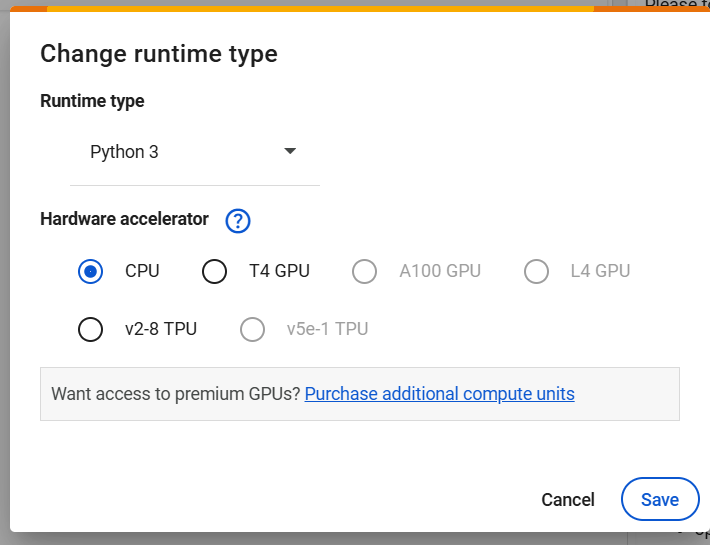

In the popup that opens, click T4 GPU:

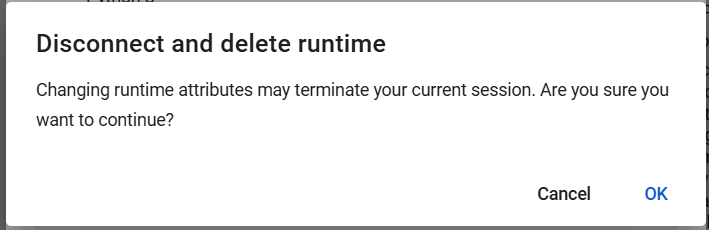

You'll see an immediate second popup:

Click OK. Then back in the first popup, click Save.

Let's verify that the GPU is functioning and includes the CUDA system from NVIDIA. In the first code cell enter the following:

import torch

print(torch.cuda.is_available())

print(torch.cuda.get_device_name(0))

print(torch.cuda.get_device_properties(0))

Run the cell. This will print out the specs. Currently I'm seeing the following:

True

Tesla T4

_CudaDeviceProperties(name='Tesla T4', major=7, minor=5, total_memory=15095MB, multi_processor_count=40, uuid=8836a4c3-cd86-e5f3-4b8c-512cb2f847dd, L2_cache_size=4MB)

I'm seeing a Tesla T4 GPU with a bit over 15GB available. According to this site (https://www.nvidia.com/en-us/data-center/tesla-t4/), this GPU has 320 tensor cores and 2560 CUDA cores. This should work quite well!

Now let's add a special library that handles much of the heavy lifting, including sentence transformers, vector database storage, and more. It's called ChromaDB (you can find out more about it at https://docs.trychroma.com/docs/overview/introduction). ChromaDB has the following features that we need here:

- Generate vector embeddings for your documents (by default it uses a Sentence Transformers model for this)

- Download and install a sentence transformer model (You can choose which one; by default it uses a popular one called all-MiniLM-L6-v2; for most of your work you can probably just use this default one)

- Store vector embeddings in a configurable database of your choice. (The default is sqlite3)

- Read a prompt or query and convert it to an embedding, and then search the documents using Cosine Similarity for ones that appear close.

This library isn't normally included in Google Colab. So let's add it. At the top, create a code cell with just this in it:

!pip install chromadb

(The exclamation point tells Jupyter Notebooks to drop to a shell instead of Python and run the command as a shell command. Also note that the order of the cells isn't really all that important, but we'll put them in the order you would likely run them.)

You'll probably get a popup notification that the runtime needs to be restarted; go ahead and click the button to restart it.

To help us with some sample data, I asked ChatGPT to put together a couple chapters of a sample employee handbook, and I put it in a public repo in GitHub.

So let's build our sample!

First, let's just do everything up to but not including the final interaction with ChatGPT. We'll begin by reading in the corpus (in our case, the fake employee handbook). Then we'll divide it up into smaller chunks that the Sentence Transformers library will process separately. The general rule of thumb here is to divide up your corpus into chunks that are standalone pieces of information. In our case, that pretty much means each paragraph or section. (I've separated each section with lines that look like "---", which makes breaking it up easier with Python.)

Here's the entire code that you'll want to put in its own cell; I'll break it down piece by piece next.

import chromadb

import urllib.request

# Load the corpus

url = 'https://github.com/wecodian/rag_demo_python/raw/refs/heads/main/employee_handbook.txt'

with urllib.request.urlopen(url) as response:

    file_content = response.read().decode('utf-8')

documents = file_content.split('---')

# Initialize Chroma

chroma_client = chromadb.PersistentClient(path="./chroma_db")

collection = chroma_client.get_or_create_collection(name="documents")

collection.add(

    documents=documents,

    ids=[str(i) for i in range(len(documents))]

)

print()

query = input('What would you like to know?')

results = collection.query(query_texts = [query], n_results = 1)

print(results['documents'][0][0])

The first two lines import the ChromaDB library and a request library. The request library is so you can grab the corpus, which I made available in a GitHub repo; the code that follows reads it in, and splits it based on the "---" characters I put between each section.

Next I initialize ChromaDB. By default it uses sqlite3 for its storage engine. While practicing with ChromaDB, I recommend that you continue using sqlite3. The path "./chroma_db" is the directory where ChromaDB keeps its sqlite3 storage.

The next line creates a collection. The collection holds the individual documents from our corpus. (I'll say more about how big to make these later in this post.) ChromaDB is capable of managing multiple collections, although typically for smaller projects you'll only create one collection.

Then we add our documents to the collection. Note the second parameter. (I'm assuming you're familiar with list comprehension; if not, read about it here https://www.w3schools.com/python/python_lists_comprehension.asp.) This second parameter is a list of IDs that align with the documents. These IDs have to be strings, and for this example I'm just numbering them so they match up with the index of the documents in the initial list. Hence the odd looking construction.
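Here is that list comprehension on its own, as a small sketch. The three strings in `documents` are hypothetical stand-ins for the chunks produced by splitting the handbook.

```python
# Hypothetical stand-ins for the chunks produced by splitting the handbook.
documents = [
    "Chunk about logging in",
    "Chunk about extended leave",
    "Chunk about expenses",
]

# One string ID per document, matching each document's index in the list.
ids = [str(i) for i in range(len(documents))]

print(ids)  # ['0', '1', '2']
```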

Also in this step, ChromaDB reads the documents, generates sentence embeddings for them, and stores the embeddings in the sqlite3 database.

And that's it! You've created the whole system at this point. Now we're ready to query it. To do so, we just use a simple input() to get the user query. And then we query the documents. In this example, I'm only asking it to return one document. But you can have it return as many as you want, ordered starting with the best match.

And finally I print out the document. The results object is a dictionary that contains the IDs of the documents, as well as the documents themselves. Each entry is a list of lists: one inner list per query, holding the matches for that query. Since I sent only one query and asked for one match, I'm just grabbing the 0th index of the outer list, and then the 0th index of that.
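To see why two indexes are needed, here is a hand-written dictionary with the same nested shape. The values are made up, and a real result carries more keys than the three shown here.

```python
# Hand-written stand-in with the same nested shape as a query result.
# The IDs, texts, and distances below are made up for illustration.
results = {
    "ids": [["4", "7"]],
    "documents": [["Text of the best match", "Text of the second-best match"]],
    "distances": [[0.31, 0.58]],
}

# Outer index: which query (we sent only one, so 0).
# Inner index: which match for that query (0 is the best match).
matches_for_first_query = results["documents"][0]
best_match = matches_for_first_query[0]

print(best_match)  # Text of the best match
```

If you ask for more than one result, the inner list simply gets longer, so looping over `results["documents"][0]` walks the matches from best to worst.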

The data model

Note that something interesting happens when you first run this. Look at this line from the output:

/root/.cache/chroma/onnx_models/all-MiniLM-L6-v2/onnx.tar.gz: 100%|██████████| 79.3M/79.3M [00:02<00:00, 36.0MiB/s]

What happened? ChromaDB downloaded the model and saved it into our local storage area!

When you run the code again...

When you run the code again, you'll get some annoying lines about how the corpus documents have already been added to the sqlite3 database:

WARNING:chromadb.segment.impl.metadata.sqlite:Insert of existing embedding ID: 0

WARNING:chromadb.segment.impl.metadata.sqlite:Insert of existing embedding ID: 1

WARNING:chromadb.segment.impl.metadata.sqlite:Insert of existing embedding ID: 2

...

You'll get one warning for each document. There are ways to disable warnings; you can google that part yourself.
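One possible way to quiet them is sketched below. It assumes ChromaDB emits these messages through Python's standard logging module under the "chromadb" name, which the "WARNING:chromadb.segment..." prefix in the output suggests. Put it in a cell that runs before the collection.add call.

```python
import logging

# Raise the threshold for every logger under the "chromadb" namespace so
# WARNING-level messages are no longer printed; errors still show up.
# Assumption: ChromaDB's loggers inherit their level from this parent.
logging.getLogger("chromadb").setLevel(logging.ERROR)
```

Keep in mind this hides all ChromaDB warnings, not just the duplicate-ID ones, so you may prefer to leave it off while debugging.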

Now generate a response

The system as we've built it so far is quite usable, and in the case of an employee handbook, we might want to stop here. (In situations like this, we probably want to just give them the exact quotes from the handbook, rather than ask something like ChatGPT to generate a more concise -- but possibly incorrect -- answer.) But let's take it to the next level and complete the RAG system!

For this step, instead of installing a complete LLM and querying it, we'll use an API, specifically OpenAI's GPT API. (This requires that you go to their site and set up a key.)

Now this step gets a little interesting, because we're actually going to build a highly specialized prompt that asks ChatGPT to answer the question based on the documents we're passing to it.

NOTE: This is where you may want to consider returning multiple documents if your corpus is big enough; the general idea is that you would have several documents that each touch on the answer, and ChatGPT will use all of them to generate a concise response. For this sample we're just sending a single document.

To do this we'll use a multiline string with the "f" formatting. Here's the updated code; I added an import for the openai library. (Colab already has that library installed, so we don't need an additional pip install line.) And then I added a line containing the OpenAI auth key.
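Pasting a key directly into a notebook makes it easy to leak when the notebook is shared. As an alternative sketch, the hypothetical helper below reads the key from an environment variable instead. The name OPENAI_API_KEY is the conventional one, and the sketch assumes you have set it before running the cell.

```python
import os


def load_api_key(env=os.environ):
    """Return the OpenAI key from the environment, or raise a clear error."""
    key = env.get("OPENAI_API_KEY", "").strip()
    if not key:
        raise RuntimeError("Set the OPENAI_API_KEY environment variable first.")
    return key


# Usage (assumes the variable was set before this cell runs):
# openai.api_key = load_api_key()
```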

Note: The API isn't free. If you run the code below and get an error "You exceeded your current quota..." you'll need to add some money to your account. (You can go as little as $5. You can add funds here: https://platform.openai.com/settings/organization/billing/overview )

Continuing with our code: at the end of the previous code, I commented out the line that prints out the document, since we don't need that at this point. Then I have the code for sending a carefully constructed prompt to ChatGPT. There's really not much to this step; we build the prompt, including our collection of documents (in this case just one), and then we get back a response along with some additional data, and we print out the answer.

Notice when I build the prompt I'm specifying that the response should be two sentences, and a friendly, casual tone. You're welcome to adjust all this as you see fit and based on your use cases.

import chromadb

import urllib.request

import openai

openai.api_key = 'YOUR_OPENAI_API_KEY'  # replace with your own key; never publish a real one

# Load the corpus

url = 'https://github.com/wecodian/rag_demo_python/raw/refs/heads/main/employee_handbook.txt'

with urllib.request.urlopen(url) as response:

    file_content = response.read().decode('utf-8')

documents = file_content.split('---')

# Initialize Chroma

chroma_client = chromadb.PersistentClient(path="./chroma_db")

collection = chroma_client.get_or_create_collection(name="documents")

collection.add(

    documents=documents,

    ids=[str(i) for i in range(len(documents))]

)

print()

query = input('What would you like to know?')

results = collection.query(query_texts = [query], n_results = 1)

# print(results['documents'][0][0])

context = results['documents'][0][0]  # for several documents: "\n".join(results['documents'][0])

prompt = f"""You are an AI assistant.

Use the following context to answer the user's question.

Please answer in two sentences and no more.

Please make your answer friendly, casual, and supportive.

Context:

{context}

Question: {query}

Answer:"""

print(prompt)

completion = openai.chat.completions.create(

    model="gpt-4o",

    messages=[

        {

            "role": "user",

            "content": prompt,

        },

    ],

)

# Uncomment the next line if you want to see the entire response object.

#print()

#print(completion)

print()

print(completion.choices[0].message.content)

Notice I also have some commented out code at the end that you're welcome to uncomment if you want to see the full response object.
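As a sketch of how the prompt-building step might be pulled into a reusable helper, the function below accepts a list of retrieved documents rather than a single one. The name `build_prompt` and its parameters are hypothetical and not part of any library; the wording of the prompt follows the listing above.

```python
def build_prompt(context_docs, query, max_sentences=2):
    """Assemble the prompt from retrieved documents and the user's question."""
    # Join all retrieved documents so the model can draw on each of them.
    context = "\n\n".join(doc.strip() for doc in context_docs)
    return (
        "You are an AI assistant.\n"
        "Use the following context to answer the user's question.\n"
        f"Please answer in {max_sentences} sentences and no more.\n"
        "Please make your answer friendly, casual, and supportive.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\n"
        "Answer:"
    )


print(build_prompt(
    ["Employees log in with a username and password."],
    "How do I log into my computer?",
))
```

With a query that asks for several results, you could pass `results['documents'][0]` as `context_docs` to include every match.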

When I ran this, I asked the simple question:

"How do I log into my computer?"

And here's the answer I received:

"To log into your computer, just power it on, enter your username and password, and follow any prompts for multi-factor authentication if needed. If you hit any snags, don't hesitate to reach out to the IT Helpdesk for a helping hand!"

Compare this to the old days

Pretty nice! So let's think about this for a bit. In the past, prior to AI, we pretty much were forced to do "full text searches" where we had to just type in a word or phrase and look for documents that had exactly those words. That worked okay, but only as long as we chose words that actually existed. Let's try the above again, but with a slightly different question:

"How do I log into my workstation?"

In this case, it finds the same document explaining how to log into the computer. Except: the document doesn't actually use the word "workstation," yet it still found it! That's the power behind sentence transformers. The model knows words based on context, and even though I didn't say "computer," it still figured out the right document.
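For contrast, here is a minimal sketch of the old-style exact-match search. The two strings in `documents` are hypothetical one-line stand-ins for handbook sections, and `keyword_search` is a made-up helper.

```python
# Hypothetical one-line stand-ins for handbook sections.
documents = [
    "To log into your computer, enter your username and password.",
    "Submit extended leave requests through the HR portal.",
]


def keyword_search(docs, phrase):
    """Old-style full-text search: keep documents containing the exact phrase."""
    return [doc for doc in docs if phrase.lower() in doc.lower()]


print(keyword_search(documents, "computer"))     # finds the login section
print(keyword_search(documents, "workstation"))  # []
```

The second search comes back empty because the word never appears in the text, which is exactly the gap the embedding-based search closes.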

The Technical Details

About the pip install line

First things first: After you install the chromadb library, it will remain active for your current session -- even if you get disconnected due to idling too long. When you get disconnected, as soon as you run a cell, you'll get reconnected. However, if you step away from your computer for too long (I'm not sure of the length of time; for me it was overnight), the next time you connect you'll get connected to a fresh virtual machine. And when that happens, you'll need to rerun the "!pip install chromadb" line. (This is why I recommend putting that pip install line in its own cell at the top of the notebook.) How will you know you need to re-run the line? You'll get a "ModuleNotFoundError" on the "import chromadb" line. Easy enough; you get the error, just re-run the pip install line.
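If you'd rather check up front than wait for the error, a small sketch like this works with only the standard library. The helper name `is_installed` is hypothetical.

```python
import importlib.util


def is_installed(module_name):
    """Return True if the module can be imported in the current runtime."""
    return importlib.util.find_spec(module_name) is not None


if not is_installed("chromadb"):
    print("chromadb is missing; re-run the '!pip install chromadb' cell.")
```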

I won't go into extreme detail here on how the sentence transformers model generates embeddings (the vectors) for a sentence. However, let's at least get a brief idea of what's happening so we're not completely in the dark.

(The good news is the Sentence Transformers library, which is the one we'll be using today, does this for us.)

Calculating Embeddings

Now that we've built our app, let's talk about the embeddings, those vectors generated by the sentence transformer model. When we process what we call our "corpus" of information, we build a huge volume of vectors for the sentences making up the documents. Those vectors need to be stored someplace. As these are essentially just arrays of numbers, they don't need an AI model to store them. Instead we use what's called a vector database.

Next, when somebody enters a prompt, we have to similarly transform their prompt into vectors. But instead of storing them in the vector database, we'll search the existing database for similar vectors based on what's called cosine similarity.

Cosine Similarity

Let's talk about cosine similarity. This is really just a matter of taking the cosine of the angle between two vectors. When we think of vectors in two dimensions, we're talking about two lines, each with a starting point and an ending point. If you studied vectors in college, you may recall there are two ways to represent them; one is using two numbers, a magnitude and a direction. Another is by simply using two points, the starting point and the ending point. Sentence transformers use the latter approach. Except the two points are in a much higher number of dimensions than two.

For example, in basic linear algebra, you might have on paper a vector that goes from (1.5, 2.5) to (4.6, 8.2). That's two points on the paper, and the vector is a line segment starting at one point, and extending to the second point. And because we're talking about two dimensions, each point has two numbers, one for the X axis, and one for the Y axis. But to simplify matters when calculating, for example, the sine or cosine between two vectors, a common practice is to "move" each vector so that its starting point is at the center, (0,0). If you do that with all the vectors you're dealing with in a math problem, then they all extend from the center, or the origin, of the graph. And that means each vector need only be represented by a single point: its ending point.

For example, if we "move" our earlier vector, (1.5, 2.5) to (4.6, 8.2), so that it's pointing out from the origin, we would end up with (0,0) to (3.1, 5.7). (I just subtracted the starting X from the ending X, and the starting Y from the ending Y.)
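That subtraction is a one-liner in Python. This is just a sketch of the arithmetic above; the rounding is there only to hide floating-point noise.

```python
start = (1.5, 2.5)
end = (4.6, 8.2)

# Subtract the start point from the end point, coordinate by coordinate.
# round() hides tiny floating-point errors such as 3.0999999999999996.
moved = tuple(round(e - s, 10) for s, e in zip(start, end))

print(moved)  # (3.1, 5.7)
```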

Now in Sentence Transformers, we're not just talking about two dimensions. We're talking hundreds of dimensions: 384 for the all-MiniLM-L6-v2 model used here, and 768 or even 1024 for larger models. And all the vectors extend from the origin. That means every vector can be represented with a single "point" in this higher-dimensional space. And so we might have a single vector, extending from the origin, that looks like this:

(2.7, 2.3, 5.6, 3.9, 1.1, 0.5, 0.8, 2.5, .......... )

Here I only wrote out the first 8 dimensions, but you get the idea. This is the outer end of a vector starting at:

(0, 0, 0, 0, 0, 0, 0, 0, ........ )

Now when we have two vectors on just a two-dimensional sheet of paper, it's easy to take the cosine of the angle between them, especially if we've shrunk them down to all sit on the unit circle. From there, we just calculate the "adjacent divided by hypotenuse."

But there's a catch we usually don't think about when we're learning this in school: We picture them on a right triangle, which means they've been condensed down to fit nicely inside a circle, like so:

[Image of right triangles fitting nicely inside a circle.]

If one vector is much longer than the other, they don't fit into a right triangle, and we can't just divide the length of one by the length of the other. We'll get the wrong answer! Instead, we need to "normalize" the vectors so their lengths no longer matter, and calculate their cosine using these steps. (And again, each vector is effectively a single point, since both vectors start at the origin, (0,0).)

- We take the dot product of the two vectors

- We divide it by the product of their magnitudes

This is a bit more involved than just dividing the adjacent by the hypotenuse, but it's still quite easy, just some quick math. Calculating the magnitude is a simple matter of applying the Pythagorean theorem: take the square root of the x coordinate squared plus the y coordinate squared.

Suppose we have two vectors on paper (i.e. in two-space):

(3,4) and (5,6)

We want to calculate their cosine. Here are the steps:

- Compute their dot product by multiplying the x of each and the y of each, and taking the sum:

Dot Product = (3 x 5) + (4 x 6) = 39

- Calculate the magnitude of each and multiply the result:

Magnitude of 1st: Square root of (3x3 + 4x4) = 5

Magnitude of 2nd: Square root of (5x5 + 6x6) = 7.81

Product of the two: 5 x 7.81 = 39.05

- Divide the first by the second: 39 / 39.05 = 0.9987

Now for calculating the cosine for vectors in higher dimensions, we do effectively the same thing, except we'll have more terms in each. For example, if we're in four-space, we might have (3,4,5,6) and (10,2,7,8). The dot product is simply 3x10 + 4x2 + 5x7 + 6x8 = 121. The magnitudes are the square root of (3x3 + 4x4 + 5x5 + 6x6) = 9.27 and the square root of (10x10 + 2x2 + 7x7 + 8x8) = 14.73. And the product of these two magnitudes, before rounding, is 136.61.

So the cosine is the dot product (121) divided by the product of the magnitudes (136.61), which gives about 0.886.
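The two worked examples above can be checked with a few lines of plain Python. This is a minimal sketch using only the standard library; `cosine_similarity` is our own helper here, not a function from ChromaDB.

```python
import math


def cosine_similarity(a, b):
    """Dot product divided by the product of the magnitudes."""
    dot = sum(x * y for x, y in zip(a, b))
    magnitude_a = math.sqrt(sum(x * x for x in a))
    magnitude_b = math.sqrt(sum(y * y for y in b))
    return dot / (magnitude_a * magnitude_b)


print(round(cosine_similarity((3, 4), (5, 6)), 4))               # 0.9987
print(round(cosine_similarity((3, 4, 5, 6), (10, 2, 7, 8)), 3))  # 0.886
```

The same function works for any number of dimensions, as long as both vectors have the same length.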

If you're curious, here's the formula:

$$\cos\theta = \frac{A \cdot B}{\|A\| \, \|B\|}$$

The top is the dot product, and the bottom is the product of the magnitudes.

One thing to note is that even when you're operating in higher dimensions, you're still just dealing with two vectors. So the calculation is pretty simple.
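In a search, that two-vector calculation is simply repeated: the query vector is compared against each stored vector in turn, and the results are sorted. Here is a toy sketch of that idea. The three-dimensional "embeddings" in `stored` are made up, and a real vector database uses far more efficient search than this brute-force loop.

```python
import math


def cosine_similarity(a, b):
    """Dot product divided by the product of the magnitudes."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


# Made-up 3-dimensional "embeddings"; real ones have hundreds of dimensions.
stored = {
    "login section": [0.9, 0.1, 0.0],
    "leave section": [0.1, 0.9, 0.2],
    "expenses section": [0.0, 0.2, 0.9],
}


def search(query_vector, n_results=1):
    """Rank stored vectors by cosine similarity to the query, best first."""
    ranked = sorted(
        stored,
        key=lambda name: cosine_similarity(stored[name], query_vector),
        reverse=True,
    )
    return ranked[:n_results]


print(search([0.8, 0.2, 0.1], n_results=2))
# ['login section', 'leave section']
```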

Chunking Your Corpus

When breaking your corpus up into small chunks that you feed into ChromaDB, how big should you make them, and what guidelines should you follow for knowing where to break them?

The general rule of thumb is to break them up into chunks that are around 200 to 300 words, not more than 500 words. However, these numbers are flexible; what matters most is that each chunk is a standalone piece of information.
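Here is a small sketch of a splitting step that also guards against two easy-to-miss problems: empty chunks left over from a trailing separator, and chunks that run past the word limit. Both helper names are hypothetical, and the 500-word default just mirrors the guideline above.

```python
def split_into_chunks(text, separator="---"):
    """Split on the separator, dropping blank chunks and stray whitespace."""
    return [chunk.strip() for chunk in text.split(separator) if chunk.strip()]


def oversized_chunks(chunks, max_words=500):
    """Return the chunks whose word count exceeds the limit."""
    return [chunk for chunk in chunks if len(chunk.split()) > max_words]


sample = "Logging in\nUse your username.\n---\nExtended leave\nAsk HR.\n---\n"
chunks = split_into_chunks(sample)

print(len(chunks), oversized_chunks(chunks))  # 2 []
```

Any chunk reported by `oversized_chunks` is a candidate for splitting further, ideally at a point where each half still stands on its own.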

Conclusion

Building a RAG system isn't terribly difficult, but it's important that you take the time to learn as much as you can about the "innards" and how it works under the hood. Don't just type in the code and run with it; carefully learn how, for example, cosine similarity works, as well as which model is used when. Keep studying and you'll get it!